This week, I had the chance to speak with a group of users and observe them using version 8.0 of WEM. This company has been using the Vignette Content Management product for many years and is still experiencing some growing pains as it transitions to WEM's updated user interface. The person doing most of the "driving" during the screen-share session had used the new interface only a little, and not at all for some of the tasks. He still works in the "console" interface, which remains available to admins, rather than the new "workspaces" model, which is aimed mostly at end users.

It was an interesting and terrifying experience to speak with customers live like that. I wasn't alone, either; three other members of the UX team, including the team lead, were in the room with me. The customers were very nice and very vocal, quick to share their opinions about all aspects of the UI, not just the picker tasks I was asking them to perform. We got a lot of good feedback on other issues: tree nodes that indicate there are child nodes when there aren't any; direction on how they like to seek, search and browse for content in the system; and concerns about changes made to the preview site menus and controls.

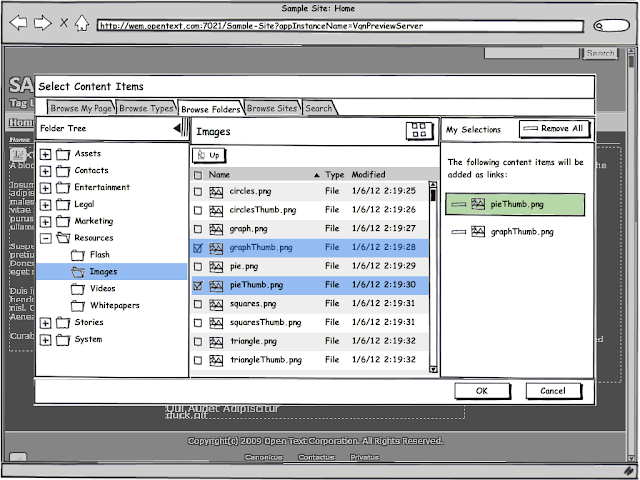

Most of their comments about the pickers concerned known issues, but it was still valuable for me to watch people struggle with some of the UI inconsistencies. Even so, after a little practice, the user performed repetitive tasks fairly easily, such as remembering that you have to stop one node higher in the tree than the channel or folder you'd like to select. One UI suggestion he made concerned adding content items to the "Your Selections" pane: instead of using the "Add to Selections" button, he thought an arrow pointing from the grid to the selections pane, which the user would click to "move" the selected items, might work better. I will keep this in mind in case my design idea doesn't test well.

I finished my assessment of the four picker types this week and moved into my initial design phase. The first thing Robin asked me to do, though, was to write up scenarios for what I intended to design, to make clear what problem I was trying to solve. I wrote all my use cases based on the "Kristen" persona that OpenText uses:

Kristen is a content contributor for whom web content management is not the focus of her job. She enters content infrequently and needs the process to be easy and intuitive. She is not very computer savvy and is an expert in an area unrelated to the website; she might be a nurse, an HR administrator or a legal assistant.

These are the four use cases I will be designing for:

- Selecting a Folder for a New Content Item

- Selecting Channels for a New Content Item

- Adding a Content Item to a Content Component

- Adding Related Links to a Content Item

I started with use case one:

Kristen needs to enter a new article into the content management system. After entering her content, she wants an easy and consistent way to select a Folder for her article. She doesn’t want to see every Folder in the system, only those she has access to where she might want to save her content items. She thinks it might be useful if she could search for Folder names instead of having to drill down to select them. She thinks it would be a neat feature if she could create a new Folder if she can’t find one that is appropriate for her article. She needs a clear indication of which Folder she has selected and clear direction for how to save her selection.

Below are screenshots of the current design, followed by my first low-fidelity mock-up:

[Image: WEM 8.1 folder picker]

[Image: My initial folder picker design]

Some of my design changes include:

- Moving the "selection" pane from the bottom to the right to be consistent with content item selectors

- Removing the "browse" pane and expanding the "tree" pane to accommodate deep hierarchies with minimal sideways scrolling

- Removing the "All Folders" root element to lessen visual clutter and to eliminate the possibility of collapsing the entire tree

- Removing the "plus" icons from tree nodes that do not contain child nodes

I purposely use "sketchy" design widgets (courtesy of Balsamiq Mockups) and minimal color so that people viewing these designs don't get distracted by look-and-feel elements and can concentrate on functionality. For the final set of mock-ups, I will probably create high-fidelity versions that use the WEM interface design.

My first milestone is next Friday, by which point I should have mock-ups for each of the four use cases above.